Info


The AI Agent Challenge is a 6-hour online, team-based competition for Replyers passionate about LLMs and AI.

Collaborators can’t participate in this edition, which is open to internal employees only, but they can join the external edition planned for the coming months. Communications will follow.

No. To play on 12th March, you need to create your own team and invite a colleague (teams have 2 to 4 members).

You can register until 11th March, 23:59 CET.

Once you’ve registered, you can form a team and invite colleagues, or join our Discord server to find participants to play with. If you still don’t have a teammate once registration closes, we will merge you with another team.

To update your details at any time, log in to your profile and click “Edit profile”, or follow this link.

To cancel your registration for the challenge, go to your Challenge Page and click “Leave team”.

To form your team, log in to the Reply Challenges platform, click the “Join the challenge” button and select “Create new team”. Once you’ve formed a team, you’ll see it when you log in to the platform. You can also choose a team name and invite your friends. Just fill in their email addresses and send the invitation. Remember, this challenge is open only to Replyers.

Your team can have 2 to 4 people, with a minimum of 2 members. If you're still registered alone at the end of the registration period, you'll be automatically paired with another participant to form a team of two. You'll receive an email notification with your team assignment.

Go to challenges.reply.com, select the AI Agent Challenge and click “Join the challenge”.

No, but you are free to leave your current team. They won’t receive any notification, so remember to tell them.

Where do I see my team?

You can see your team on your challenge page: go to challenges.reply.com and, on the homepage, click “Your Challenge Page”.

No, this edition is open to Replyers only.

We strongly recommend exploring all the materials in the Train & Learn section, including:

Learning modules (from basics to advanced topics on agent creation and resource management)

Tutorials and instructions for the tools provided during the competition

Sandbox problems to practice and understand the challenge mechanics

You can use any programming language you're comfortable with to build your agentic system. The most commonly used languages for AI agent development are Python and JavaScript/TypeScript, but the choice is entirely up to you and your team. The only requirement is that your code must be functional and submitted as specified in the competition rules.

Langfuse is required for tracking and validation purposes only. Your score will be calculated exclusively based on the output files you submit, not on Langfuse tracking data. Make sure to include your Langfuse session ID in all submissions as per the instructions in the Train & Learn section.

The sandbox mode is a game simulation, useful to help you understand how to upload your solution.

Upload your files by dragging and dropping them or selecting them from your computer.

Training dataset: Submit an output file for each dataset.

Evaluation dataset: Submit both an output file and your source code. The source code must be provided as a zip file containing your complete agentic system with all necessary components to run it (code, dependencies list, configuration files, instructions, etc.).

Important: To ensure proper tracking according to the competition rules, you must include the Langfuse session ID in your submission. Find detailed instructions in the Train & Learn section.

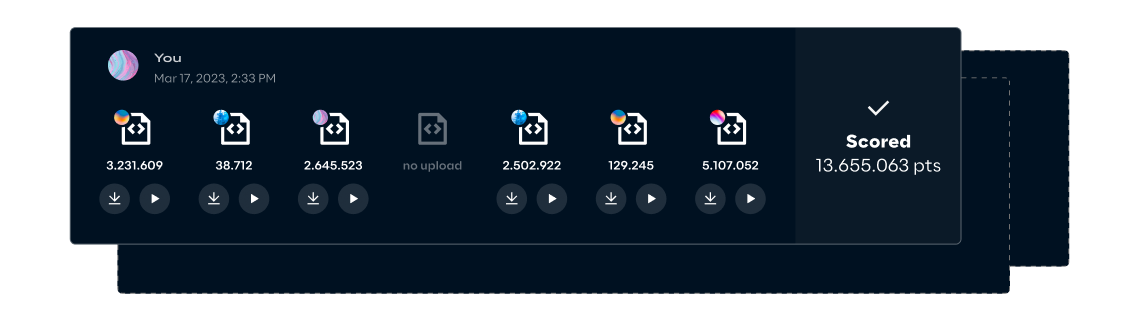
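As a rough illustration of the evaluation-dataset packaging step, the sketch below zips a project folder using only Python's standard library. The helper name `build_submission_zip`, the list of skipped folders, and the example paths are assumptions made for this example, not part of the official instructions. How the Langfuse session ID must be supplied is covered in the Train & Learn section and is not modeled here.

```python
import zipfile
from pathlib import Path

# Folders that usually should not ship with the source.
# This list is an assumption; adjust it to your own project.
SKIPPED_DIRS = {".git", "__pycache__", ".venv", "node_modules"}


def build_submission_zip(project_dir: str, zip_path: str) -> list[str]:
    """Zip every file under project_dir and return the archived relative paths."""
    root = Path(project_dir)
    target = Path(zip_path).resolve()
    archived = []
    with zipfile.ZipFile(target, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(root.rglob("*")):
            # Skip directories and the archive itself if it lives inside the project.
            if not path.is_file() or path.resolve() == target:
                continue
            relative = path.relative_to(root)
            # Skip anything located inside an excluded folder.
            if SKIPPED_DIRS.intersection(relative.parts):
                continue
            archive.write(path, arcname=relative.as_posix())
            archived.append(relative.as_posix())
    return archived
```

A call such as `build_submission_zip("my_agent", "submission.zip")` (both names hypothetical) returns the list of archived files, which is worth reading before uploading to confirm that code, dependency list, configuration files and instructions are all present.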

Yes. You’ll see a list of scores for all your submissions.

No, but you’ll see your submission scores.

You're free to choose the tools and frameworks that best suit your approach. However, for the competition you must use:

LLMs via API: We will provide you with an API key to access the LLMs available for the challenge

Langfuse: You must integrate Langfuse for tracking purposes following the instructions provided in the Train & Learn section

Beyond these requirements, you can use any additional libraries, frameworks, or tools to develop your agentic system.

Try reloading the page, then try clearing your cache and cookies. If you’re still having problems, message the Reply AIvengers on chat or email challenges@reply.com.

You’ll need your own computer with an internet connection.

No, the platform does not execute the code. The score is calculated using only the output files. Still, the AIvengers team must be able to execute the provided code to check that the submission is correct.

The AI Agent Challenge is a 6-hour competition where your team will build an agentic system to solve a specific problem statement.

Timeline on March 12th:

⏰ Challenge starts at 15:30

💯 Leaderboard frozen from 21:00 to 21:30

✋ Challenge ends at 21:30

🏆 Within 10 working days: podium validation & final results

On your challenge page you’ll have access to:

Training Dataset:

- Use these datasets to develop and refine your agentic system

- Submit outputs as many times as you want

- Check your score after each submission to track your progress

Evaluation Dataset:

- Submit your final solution only once

- Include both your output and source code (zip file with your agentic system)

- Your final score will be based solely on the evaluation dataset performance

At challenge start: Your team will have access to the first three datasets.

Once your team submits the final evaluation solution for the first three datasets, datasets 4 and 5 will be automatically unlocked.

Resources Provided:

Problem statement

API key for LLM access

Training and evaluation datasets

Token allocation for your team: your team receives a budget in two stages:

Datasets 1-3: $40 in tokens

Datasets 4-5: $120 in tokens (unlocked only after submitting evaluation solutions for datasets 1-3)

Token monitoring dashboard

Find all materials, instructions, and tools on the competition page and in the Train & Learn section.

Token management is a strategic component of the challenge. Once your allocated budget is exhausted, it cannot be refilled. Use your tokens wisely and plan your approach carefully!
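One way to keep an eye on spend alongside the token monitoring dashboard is a small local tracker. The sketch below is only an illustration: the `TokenBudget` class and the per-1,000-token prices are hypothetical, real prices depend on the models made available for the challenge, and the sketch tracks one stage at a time without modeling whether unspent budget carries over.

```python
from dataclasses import dataclass

# Stage budgets in USD, taken from the allocation described above.
STAGE_BUDGETS_USD = {"datasets_1_3": 40.0, "datasets_4_5": 120.0}


@dataclass
class TokenBudget:
    """Tracks estimated spend against a fixed budget that cannot be refilled."""

    limit_usd: float
    spent_usd: float = 0.0

    @property
    def remaining_usd(self) -> float:
        return self.limit_usd - self.spent_usd

    @staticmethod
    def estimate_cost(input_tokens: int, output_tokens: int,
                      usd_per_1k_input: float, usd_per_1k_output: float) -> float:
        """Estimate the cost of one LLM call from token counts and unit prices."""
        return (input_tokens / 1000 * usd_per_1k_input
                + output_tokens / 1000 * usd_per_1k_output)

    def charge(self, cost_usd: float) -> None:
        """Record a call, refusing it if it would exceed the remaining budget."""
        if cost_usd > self.remaining_usd:
            raise RuntimeError(
                f"call costs ${cost_usd:.4f} but only ${self.remaining_usd:.4f} remains"
            )
        self.spent_usd += cost_usd


# Usage with made-up prices (0.50 USD per 1k input tokens, 1.50 USD per 1k output tokens).
budget = TokenBudget(limit_usd=STAGE_BUDGETS_USD["datasets_1_3"])
cost = TokenBudget.estimate_cost(2000, 500, usd_per_1k_input=0.5, usd_per_1k_output=1.5)
budget.charge(cost)
```

Checking the estimate before each call, rather than after, means a runaway agent loop stops with an error instead of silently draining the allocation.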

You can message the AIvengers team via chat (Discord).

Yes! You're free to use any development and execution environment you prefer.

The only requirements are:

You must include the Langfuse session ID for tracking (see instructions in the Train & Learn section)

Your final submission for the evaluation dataset must include both output and source code (zip file with your agentic system)

Yes, it’s an online-only competition.

We’ll update the leaderboard regularly to show how teams are performing. We’ll also freeze it 30 minutes before the challenge deadline (but we’ll continue to update scores).


It depends on the dataset:

Training dataset: Unlimited submissions. You can upload and test your solutions as many times as you want to refine your agentic system and track your progress.

Evaluation dataset: One submission only. You can upload your final solution only once, so make sure it's complete and tested before submitting.

Your final score will be based solely on your evaluation dataset submission.

Your score is based on three key criteria:

Detection Accuracy (30%): How well your system distinguishes between fraudulent and non-fraudulent transactions.

Economic Impact Assessment (30%): The financial consequences of errors, prioritizing the prevention of high-cost fraudulent activities and operational losses.

Agentic Efficiency and Optimization (40%): Your system's speed, cost-effectiveness, and architectural complexity. Both over-engineered and overly simplistic solutions will be penalized.

Benchmark & Bonus:

All metrics are evaluated against an optimal benchmark solution. Solutions that outperform this benchmark receive additional credit.

Dataset Difficulty:

Each dataset has a weighted scoring system where more complex datasets offer higher maximum points.
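To get a feel for how the three weights interact, the sketch below combines them as a plain weighted sum. This is an assumption for illustration only: the exact formula, how each criterion is normalized against the benchmark, the size of any bonus, and the per-dataset weights are not stated here. The component names and the idea that each component is a number where 1.0 means "matches the benchmark" are hypothetical.

```python
# Criterion weights as listed above.
WEIGHTS = {
    "detection_accuracy": 0.30,
    "economic_impact": 0.30,
    "agentic_efficiency": 0.40,
}


def combined_score(components: dict[str, float]) -> float:
    """Weighted sum of per-criterion scores (an assumed, simplified formula)."""
    missing = WEIGHTS.keys() - components.keys()
    if missing:
        raise ValueError(f"missing criteria: {sorted(missing)}")
    return sum(WEIGHTS[name] * components[name] for name in WEIGHTS)


# Hypothetical example: strong detection, weaker efficiency.
example = combined_score({
    "detection_accuracy": 0.9,
    "economic_impact": 0.8,
    "agentic_efficiency": 0.5,
})
```

Under this simplified model the example comes out at about 0.71, and the efficiency term carries the most weight, so an accurate but expensive system still leaves a large share of the score on the table.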

Your submission will be rejected if it contains any of the following errors:

Missing output files: All required output files must be included

Missing source code: Evaluation dataset submissions must include source code

Missing Langfuse session ID: Required for tracking and validation

Corrupted zip file: Ensure your zip file is properly compressed and can be extracted

Incomplete system: Missing dependencies, configuration files, or instructions

Double-check your submission before uploading, especially for the evaluation dataset (you only get one chance!).
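The rejection reasons above can be partly checked locally before uploading. The following is a minimal sketch using only the standard library. The function name `check_submission`, the accepted dependency file names, and the "a README counts as instructions" rule are assumptions for this example, and the official validation may check different things.

```python
import zipfile
from pathlib import Path
from typing import Optional

# File names treated as a dependency list (an assumption for this sketch).
DEPENDENCY_FILES = {"requirements.txt", "pyproject.toml", "package.json"}


def check_submission(output_path: str, source_zip_path: Optional[str],
                     session_id: Optional[str]) -> list[str]:
    """Return a list of problems found; an empty list means nothing obvious is wrong."""
    problems = []
    if not Path(output_path).is_file():
        problems.append("missing output file")
    if not session_id or not session_id.strip():
        problems.append("missing Langfuse session ID")
    if source_zip_path is None:
        problems.append("missing source code")
    elif not zipfile.is_zipfile(source_zip_path):
        problems.append("corrupted zip file")
    else:
        with zipfile.ZipFile(source_zip_path) as archive:
            # testzip() returns the name of the first damaged member, or None.
            if archive.testzip() is not None:
                problems.append("corrupted zip file")
            names = [Path(name).name.lower() for name in archive.namelist()]
        if not any(name.startswith("readme") for name in names):
            problems.append("incomplete system: no README with instructions")
        if not any(name in DEPENDENCY_FILES for name in names):
            problems.append("incomplete system: no dependency list")
    return problems
```

Running this right before the single evaluation upload catches the mechanical failures. It cannot confirm that the code actually runs, so a clean extraction and test run on another machine is still worthwhile.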


At the end of the challenge, the AIvengers team will review and validate the best-scoring submission from each top-ranked team on the leaderboard by analyzing the source code files. The Reply team’s decisions regarding the rules of this AI Agent competition are final.

We’ll publish a full list of results and notify all finalists no later than 10 working days after the day of the challenge.

Each member of the first-placed team will win an iPhone 17 Pro. Each member of the second-placed team will win a PlayStation 5 Slim, and each member of the third-placed team will receive a pair of Ray-Ban Meta glasses.

You’ll get some emails before and after the challenge, so check your mailbox regularly. You can always message the AIvengers during the challenge via chat (Discord) if you have questions.

All communications will be in English, though you and your teammates can speak whatever language(s) you like! 😊

Reply AIvengers write the problems and are responsible for enforcing all challenge rules. They’ll review submissions from teams and award prizes. They may exclude any participants or teams at any time, for breaching competition rules.

We want to make training sessions and the challenge fair for everyone. So never stop others from taking part, for instance by overloading the challenge platform or by sending files containing malware, viruses or other code intended to interrupt, destroy or limit the operation of the platform, software, hardware or telecoms equipment. This will result in instant disqualification.

Additional actions to ensure a fair competition for all participants:

No solution sharing: Sharing solutions, code, or outputs between teams is strictly prohibited

Each team must develop their own independent solution

Violations will result in disqualification

If you’ve spotted any cheating or unfair behaviour, email challenges@reply.com.